#HIDDEN

import os

try:
    import folium
except ImportError as e:
    # Install folium on the fly if the notebook environment lacks it
    print("occurs:", e)
    !pip install folium
    import folium

from folium import plugins
import pandas as pd
import numpy as np
import datetime
from folium.plugins import HeatMap

Explore the heatmap

Welcome to our interactive heatmap, built from the data collected from users' responses to Meetup events. Press the Show Widgets button to start the visualization. It does not matter whether you press the upper or the lower one: the result is the same.

Note

Loading the map may take up to a few minutes, depending on traffic.

Don't be discouraged: take the opportunity to read the instructions.

What can I see?

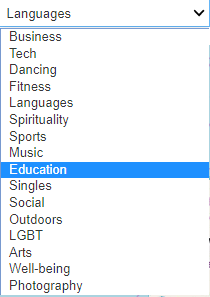

You can explore the map of Europe and see how many events covered a given topic. Topics are the subjects discussed at the events, and they vary widely: from Business to Tech, from LGBT to Education.

Are you sure your map is not simply a population map?

Absolutely. We took care to scale our data by the resident population of each macro-area, so that the "hot spots" are not directly proportional to the population of the area but reflect how much that topic is actually covered there.
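As a rough illustration of this scaling, the sketch below converts raw event counts into events per 100,000 residents. The area names and figures are hypothetical and chosen only to show the arithmetic; the function name `events_per_100k` is likewise an assumption, not part of the notebook.

```python
# Hypothetical event counts and resident populations (illustration only).
event_counts = {"city_a": 1200, "city_b": 300}
population = {"city_a": 3_000_000, "city_b": 500_000}


def events_per_100k(counts, pop):
    """Return the number of events per 100,000 residents for each area."""
    return {area: counts[area] * 100_000 / pop[area] for area in counts}


rates = events_per_100k(event_counts, population)
for area, rate in rates.items():
    print(area, rate)
```

In this made-up example the smaller area ends up with the higher rate even though it hosts fewer events, which is the effect the scaling is meant to expose.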

How can I access the content?

Exploring the content is very simple: just click the dedicated widget:

Once you open the drop-down menu, you will be offered a choice of topics:

At this point, all that is left is to select the topic you are interested in and explore freely.
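Selecting a topic boils down to keeping only the events whose keywords include it. The sketch below shows one way to do that, assuming each event stores its keywords as a string that looks like a Python list (for example `"['Tech', 'Business']"`); the sample rows and the helper name `points_for_topic` are hypothetical.

```python
import ast

# Hypothetical rows: (latitude, longitude, keywords stored as a string).
events = [
    (45.46, 9.19, "['Tech', 'Business']"),
    (48.85, 2.35, "['Education']"),
    (52.52, 13.40, "['Tech']"),
]


def points_for_topic(rows, topic):
    """Return [lat, lon] pairs for the events tagged with the given topic."""
    points = []
    for lat, lon, raw_keywords in rows:
        # Assumption: the string is a valid Python list literal.
        keywords = ast.literal_eval(raw_keywords)
        if topic in keywords:
            points.append([lat, lon])
    return points


tech_points = points_for_topic(events, "Tech")
print(tech_points)
```

A list of `[lat, lon]` pairs in this shape is what the notebook's code passes to folium's `HeatMap`.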

#HIDDEN

process = False
#process = True

if process:
    data = pd.read_csv("export_european_capitals.csv")
    print(len(data))

    # A distance of 0 is replaced with 7 (arbitrary value, chosen for Milan)
    data.loc[data['dist'] == 0, 'dist'] = 7
    data.sort_values(["ID_event", "dist"], inplace=True)

    # Keep one row per event: the one with the largest distance
    ddrop = data.drop_duplicates(subset="ID_event", keep="last")
    ddrop = ddrop.drop("ID_member", axis=1)
    ddrop = ddrop.reset_index()

    # Per-event distance statistics ('maxdist' is the 75th percentile)
    d = data.groupby("ID_event")['dist'].quantile(0.75).reset_index()
    print(d.head())
    d.rename(columns={'dist': 'maxdist'}, inplace=True)
    d2 = data.groupby("ID_event")['dist'].mean().reset_index()
    d2.rename(columns={'dist': 'meandist'}, inplace=True)
    ddrop = ddrop.merge(d, on="ID_event")
    ddrop = ddrop.merge(d2, on="ID_event")
    d3 = data.groupby("ID_event")['dist'].median().reset_index()
    d3.rename(columns={'dist': 'mediandist'}, inplace=True)
    ddrop = ddrop.merge(d3, on="ID_event")
    print(ddrop.head())

    # Number of participants per event
    d1 = data.groupby("ID_event")['ID_member'].count().reset_index()
    print(d1.head())
    d1.rename(columns={'ID_member': 'count_part'}, inplace=True)
    ddrop = ddrop.merge(d1, on="ID_event")
    del d1, d2, d, d3, data

    event_df = pd.read_csv("../../csv/struttura/event.csv")

    def format_event_time(ms):
        """Convert a millisecond timestamp to a readable date string."""
        try:
            return datetime.datetime.fromtimestamp(int(float(ms) / 1000)).strftime('%c')
        except (TypeError, ValueError, OverflowError, OSError):
            return "Non disponibile"  # Italian for "Not available"

    event_df['date'] = event_df['time'].apply(format_event_time)
    ddrop = ddrop.merge(event_df, left_on="ID_event", right_on="_key")
    del event_df

    topic_df = pd.read_csv("event_topic_keywords.csv")
    ddrop = ddrop.merge(topic_df, left_on="ID_event", right_on="ID").reset_index().drop(columns="level_0")
    del topic_df

    ddrop.to_csv("post_proc.csv")
else:
    # Load the preprocessed data instead of rebuilding it
    ddrop = pd.read_csv("data/post_proc.csv")

#HIDDEN

# Treat Milan and Rome as separate areas instead of a single "it" entry
ddrop.loc[(ddrop['Country'] == "it") & (ddrop['g_city'] == "Milano"), 'Country'] = "it_Milano"
ddrop.loc[(ddrop['Country'] == "it") & (ddrop['g_city'] == "Roma"), 'Country'] = "it_Roma"

#HIDDEN

country_list = list(ddrop.Country.unique())

# Countries with fewer than 50 events are dropped automatically
d = ddrop.groupby("Country")['ID_event'].count()
rm1_list = []
for elem in country_list:
    if d[elem] < 50:
        rm1_list.append(elem)

# Outside EU borders, or just too few events in there
manual_rm_list = ["ca", "ge", "ru", "cy", "us", "bg", "tr", "by", "am", "rs", "sk", "si", "li", "mk", "md", "ba", "gi", "ad", "hu", "hr", "ro", "no", "fi"]
rm_list = list(set(rm1_list + manual_rm_list))

# Filter instead of calling remove(), which raises if a code is not in the data
country_list = [elem for elem in country_list if elem not in rm_list]

#HIDDEN

pop = {
    'fr': 2141000,
    'es': 3174000,
    'gb': 8136000,
    'nl': 821752,
    'se': 965232,
    'de': 3575000,
    'cz': 1281000,
    'pl': 1765000,
    'ie': 544107,
    'be': 174383,
    'lu': 114303,
    'no': 634293,
    'hu': 1756000,
    'dk': 602481,
    'ch': 133115,
    'it_Milano': 1352000,
    'at': 1868000,
    'fi': 631695,
    'pt': 504718,
    'ro': 1836000,
    'gr': 3074160,
    'it_Roma': 2865000,
    'ua': 2884000,
    'hr': 792875
}

#HIDDEN

ddrop=ddrop.loc[ddrop['Country'].isin(country_list)]

#HIDDEN

count_df=ddrop.groupby('Country', as_index=False).count()

count_df=count_df[['Country', 'index']].reset_index()

count_df['norm_count']=0

#HIDDEN

# Events per 100,000 residents for each area
count_df['norm_count'] = (count_df['index'] / count_df['Country'].map(pop)) * 100000

#HIDDEN

capitals = {
    'fr': "Paris",
    'es': "Madrid",
    'gb': "London",
    'nl': "Amsterdam",
    'se': "Stockholm",
    'de': "Berlin",
    'cz': "Prague",
    'pl': "Warsaw",
    'ie': "Dublin",
    'be': "Brussels",
    'lu': "Luxembourg",
    'no': "Oslo",
    'hu': "Budapest",
    'dk': "Copenhagen",
    'ch': "Bern",
    'it': "Rome",
    'at': "Vienna",
    'fi': "Helsinki",
    'pt': "Lisbon",
    'ro': "Bucharest",
    'gr': "Athens",
    'ua': "Kiev",
    'hr': "Zagreb",
}

#HIDDEN

from ipywidgets import interact

go = True
if go:
    # Build the list of distinct topics found in the Keywords column
    topic_list = []
    for line in ddrop.itertuples():
        for topic in line.Keywords.split(","):
            # Strip list punctuation and spaces from each keyword
            topic = topic.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
            if topic not in topic_list:
                topic_list.append(topic)

count_dict = {}

# Topics excluded from the dropdown
topic_list_rm = ["", "Pets", "Food&Drink", "Scifi", "Beliefs", "Crafts", "BookClubs", "Support", "Movements", "Games", "Films", "Lifestyle", 'Moms&Dads', 'Auto', 'Paranormal', "Community", "Fashion"]
for elem in topic_list_rm:
    try:
        topic_list.remove(elem)
    except ValueError:
        # Report excluded topics that were not present in the data
        print(elem)

#HIDDEN

import json

process = False
if process:
    # Count, for every country, how many events mention each topic
    for country in count_df.Country:
        punt_count_dict = {}
        for topic in topic_list:
            count_topic = 0
            for line in ddrop.itertuples():
                if line.Country == country:
                    keywords = line.Keywords
                    for word in keywords.split():
                        topic_curr = word.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
                        if topic_curr == topic:
                            count_topic += 1
            punt_count_dict[topic] = count_topic
        count_dict[country] = punt_count_dict

    with open('count_topic_dict.json', 'w') as f:
        json.dump(count_dict, f)
else:
    # Load the cached counts
    with open('data/count_topic_dict.json') as f:
        count_dict = json.load(f)

#HIDDEN

# Normalization over topics
process = False
if process:
    norm_count_dict = {}
    for country in count_dict:
        normalization = pop[country]
        norm_topic_dict = {}
        for topic in count_dict[country]:
            # Events per 200,000 residents, truncated to an integer
            norm_topic_dict[topic] = int((count_dict[country][topic] / normalization) * 200000)
        norm_count_dict[country] = norm_topic_dict

    with open('norm_count_topic_dict.json', 'w') as f:
        json.dump(norm_count_dict, f)
else:
    # Load the cached normalized counts
    with open('data/norm_count_topic_dict.json') as f:
        norm_count_dict = json.load(f)

#HIDDEN

go = False
if go:
    @interact(topic=topic_list)
    def show_canned_examples(topic):
        print(len(ddrop))

        # Use venue coordinates, falling back to group coordinates when missing
        lat_df = ddrop[['v_lat', 'v_lon']].copy()
        lat_df['v_lat'] = lat_df['v_lat'].fillna(ddrop['g_lat'])
        lat_df['v_lon'] = lat_df['v_lon'].fillna(ddrop['g_lon'])
        lat_df = lat_df.astype(float)

        from folium.plugins import HeatMap
        map_hooray = folium.Map(location=[ddrop['v_lat'].mean(), ddrop['v_lon'].mean()], zoom_start=5)

        # Collect the coordinates of every event tagged with the selected topic
        heat_data = []
        for line in lat_df.itertuples():
            if ddrop.Country.at[line.Index] in country_list:
                keywords = ddrop.Keywords.at[line.Index]
                for topics in keywords.split():
                    topic_curr = topics.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
                    if topic_curr == topic:
                        heat_data.append([float(line.v_lat), float(line.v_lon)])

        # Plot it on the map
        HeatMap(heat_data).add_to(map_hooray)

        # Save and display the map
        map_hooray.save("/home/dario/NeoMeetup/Visualizations/map/heat_try.html")
        display(map_hooray)

#HIDDEN

from ipywidgets import interact

go = False
if go:
    # Rebuild the full topic list (no exclusions)
    topic_list = []
    for line in ddrop.itertuples():
        for topic in line.Keywords.split(","):
            topic = topic.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
            if topic not in topic_list:
                topic_list.append(topic)

    @interact(topic=topic_list)
    def show_canned_examples(topic):
        print(len(ddrop))

        # Use venue coordinates, falling back to group coordinates when missing
        lat_df = ddrop[['v_lat', 'v_lon']].copy()
        lat_df['v_lat'] = lat_df['v_lat'].fillna(ddrop['g_lat'])
        lat_df['v_lon'] = lat_df['v_lon'].fillna(ddrop['g_lon'])
        lat_df = lat_df.astype(float)

        from folium.plugins import HeatMap
        map_hooray = folium.Map(location=[ddrop['v_lat'].mean(), ddrop['v_lon'].mean()], zoom_start=4)

        heat_data = []
        for row in count_df.itertuples():
            norm_count = 0
            for line in lat_df.itertuples():
                if ddrop.Country.at[line.Index] == row.Country:
                    norm_count += 1
                    keywords = ddrop.Keywords.at[line.Index]
                    for topics in keywords.split():
                        curr_topic = topics.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
                        if curr_topic in topic_list:
                            heat_data.append([float(line.v_lat), float(line.v_lon)])
                    # Stop once the normalized quota for this country is reached
                    if norm_count == row.norm_count:
                        print("for country {} inserted {} events".format(row.Country, norm_count))
                        break

        # Plot it on the map
        HeatMap(heat_data).add_to(map_hooray)
        plugins.Fullscreen(
            position='bottomright',
            title='Expand me',
            title_cancel='Exit me',
            force_separate_button=True
        ).add_to(map_hooray)

        # Save and display the map
        map_hooray.save("/home/dario/NeoMeetup/Visualizations/map/heat_try.html")
        display(map_hooray)

#HIDDEN

# Use venue coordinates, falling back to group coordinates when missing
lat_df = ddrop[['v_lat', 'v_lon']].copy()
lat_df['v_lat'] = lat_df['v_lat'].fillna(ddrop['g_lat'])
lat_df['v_lon'] = lat_df['v_lon'].fillna(ddrop['g_lon'])
lat_df = lat_df.astype(float)

#HIDDEN

process = False
if process:
    # Rows are collected in a list because DataFrame.append was removed in pandas 2.0
    heat_rows = []
    topic_map_dict = {}
    for topic in topic_list:
        print("#######\ntopic is ", topic, "\n######")
        map_hooray = folium.Map(location=[ddrop['v_lat'].mean(), ddrop['v_lon'].mean()], zoom_start=5)
        heat_data = []
        for country in count_df.Country:
            norm_count = 0
            for line in lat_df.itertuples():
                if ddrop.Country.at[line.Index] == country:
                    keywords = ddrop.Keywords.at[line.Index]
                    for topics in keywords.split():
                        curr_topic = topics.replace("[", "").replace("]", "").replace("'", "").replace(",", "").replace(" ", "")
                        if curr_topic == topic:
                            heat_rows.append({"topic": topic,
                                              "v_lat": line.v_lat,
                                              "v_lon": line.v_lon,
                                              "Country": country})
                            norm_count += 1
                            # Stop once the normalized quota for this country and topic is reached
                            if norm_count == norm_count_dict[country][topic]:
                                print("for country {} inserted {} events".format(country, norm_count))
                                break
                    if norm_count == norm_count_dict[country][topic]:
                        break

    heat_df = pd.DataFrame(heat_rows, columns=["topic", "v_lat", "v_lon", "Country"])
    heat_df.to_csv("heat_df.csv", index=False)
    heat_df.head()

#HIDDEN

go = False
if go:
    from folium.plugins import HeatMap

    # Pre-build one map per topic
    topic_map_dict = {}
    for topic in topic_list:
        map_hooray = folium.Map(location=[ddrop['v_lat'].mean(), ddrop['v_lon'].mean()], zoom_start=5)
        heat_data = []
        for country in count_df.Country:
            norm_count = 0
            for line in heat_df.itertuples():
                if line.Country == country:
                    if line.topic == topic:
                        heat_data.append([float(line.v_lat), float(line.v_lon)])

        # Plot it on the map
        HeatMap(heat_data).add_to(map_hooray)
        plugins.Fullscreen(
            position='bottomright',
            title='Expand me',
            title_cancel='Exit me',
            force_separate_button=True
        ).add_to(map_hooray)
        topic_map_dict[topic] = map_hooray

    @interact(topic=topic_list)
    def show_canned_examples(topic):
        return topic_map_dict[topic]

#HIDDEN

try:
    heat_df = pd.read_csv("heat_df.csv")
except FileNotFoundError:
    # Fall back to the copy hosted on GitHub
    heat_df = pd.read_csv("https://raw.githubusercontent.com/DBertazioli/NBinteract/master/heat_df.csv")

from folium.plugins import HeatMap

topic_map_dict = {}

@interact(topic=topic_list)
def show_canned_examples(topic):
    map_hooray = folium.Map(location=[ddrop['v_lat'].mean(), ddrop['v_lon'].mean()], zoom_start=5)

    # Keep the points of the selected topic in the retained countries
    selected = heat_df[(heat_df['topic'] == topic) & heat_df['Country'].isin(count_df['Country'])]
    heat_data = selected[['v_lat', 'v_lon']].astype(float).values.tolist()

    # Plot it on the map
    HeatMap(heat_data).add_to(map_hooray)
    plugins.Fullscreen(
        position='bottomright',
        title='Expand me',
        title_cancel='Exit me',
        force_separate_button=True
    ).add_to(map_hooray)

    # Returning the map lets the widget display it
    return map_hooray

The map is built with folium, a Python interface to the Leaflet mapping library.

View the full code here.